Understanding Generative AI (Part 2): Microsoft Azure-hosted LLMs and Data Privacy

In this second part of the series, we look at how an LLM hosted on Microsoft Azure can help protect data privacy. We have also prepared a checklist that companies can use to evaluate LLM providers.

How Microsoft Azure-Hosted LLMs Can Give You More Control Over Your Data

For organizations that want an extra degree of control over their data, using LLMs through Microsoft Azure is an attractive option. Azure offers the Azure OpenAI Service, a cloud-based service where OpenAI’s models (like GPT-4) run in Microsoft’s Azure datacenters, as well as options to deploy other models or even fine-tune and host your own. Choosing an Azure-hosted LLM can help address data privacy concerns in several ways:

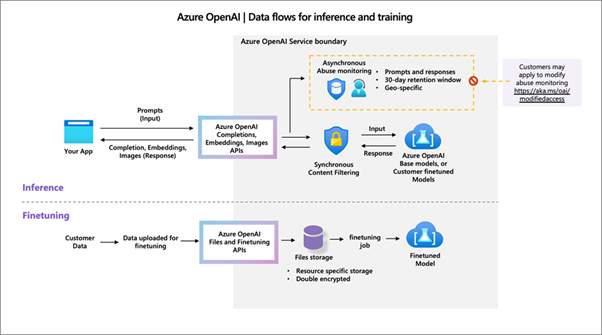

Figure: Data flow in Azure OpenAI Service for inference and fine-tuning, showing that all customer prompts and completions stay within Azure’s environment. Data is processed within the Azure OpenAI service boundary (with region-specific handling and a default 30-day retention for abuse monitoring), and is not shared with OpenAI or any other customer. Fine-tuning data is stored in a dedicated, encrypted location, and any fine-tuned model is available exclusively to the customer.

- Data Residency and Sovereignty: When you deploy an LLM via Azure, you choose the region for your Azure OpenAI resource (e.g. West Europe, North Europe, or Germany West Central). According to Microsoft, all customer data at rest remains in the region you select. For European companies, this means you can keep data within EU borders by selecting an EU region. (Be careful to choose a region-specific deployment type: Azure notes that globally distributed deployments may process data in other regions, so pick a region-specific option for compliance.) Keeping data in-region avoids most of the complexity of international data transfers under GDPR. Microsoft’s services are generally designed to meet EU data protection requirements, and Microsoft makes contractual GDPR commitments to its customers.

- No Sharing with OpenAI or Others: A big advantage of Azure’s managed service is the isolation of your data. According to Microsoft, when you use Azure OpenAI, your prompts, completions, embeddings, and training data are not accessible to other customers and are not sent to OpenAI (the company) at all. Microsoft also explicitly states that your data is not used to improve OpenAI’s models, Microsoft’s models, or any third-party services. The OpenAI models running in Azure are essentially a secured copy running within Microsoft’s cloud; the service does not call home to OpenAI’s servers. This keeps the relationship straightforward: your company (controller) -> Microsoft (processor). OpenAI as an external party is not in the data flow, which can simplify DPA arrangements (the processing is covered by Microsoft’s standard online services terms, which include GDPR terms and often the EU Standard Contractual Clauses by default).

- Fine-Tuning and Custom Models with Privacy: Azure OpenAI allows you to fine-tune models on your own data securely. When you upload training data for fine-tuning, it is stored in an isolated Azure Storage instance in your region, and is encrypted (even double encrypted, per Azure documentation). The fine-tuned model that results is available exclusively for your use – it’s not shared or used to benefit other Azure customers. This is important: if you invest in customizing an AI on your proprietary data, you don’t want that data or the tuned model to leak out. Azure ensures that it remains your asset. From a GDPR perspective, any personal data in training material is still under your control, and you can delete the training data from the storage when done. Microsoft, as processor, will act on your instructions to remove or retain it as needed.
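
The residency point above can be turned into a simple pre-deployment check. The following is a minimal Python sketch, not an Azure API: the `Deployment` record, the `EU_REGIONS` allow-list, and the rule that SKU names beginning with "Global" are not region-specific are all assumptions made for illustration. Verify region and deployment-type names against the current Azure documentation before relying on them.

```python
from dataclasses import dataclass

# Assumption: an illustrative, non-exhaustive allow-list of Azure region
# names located in EU member states. Maintain your own list.
EU_REGIONS = {
    "westeurope", "northeurope", "germanywestcentral",
    "francecentral", "swedencentral", "italynorth",
    "spaincentral", "polandcentral",
}


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record describing one model deployment."""
    name: str
    region: str  # Azure region name, e.g. "swedencentral"
    sku: str     # deployment type, e.g. "Standard" or "GlobalStandard"


def residency_issues(dep: Deployment) -> list[str]:
    """Return human-readable residency concerns; empty if none were found."""
    issues = []
    if dep.region.lower() not in EU_REGIONS:
        issues.append(
            f"{dep.name}: region '{dep.region}' is not in the EU allow-list"
        )
    # Assumption: deployment types whose name starts with "Global" may
    # process prompts outside the selected region.
    if dep.sku.lower().startswith("global"):
        issues.append(
            f"{dep.name}: deployment type '{dep.sku}' may process data "
            "outside the selected region"
        )
    return issues
```

Calling `residency_issues(Deployment("chat-eu", "swedencentral", "Standard"))` returns an empty list, while a deployment with a global deployment type or a non-EU region returns one message per concern. A check like this only flags configuration; it does not replace reading the provider's data-processing terms.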

In essence, Azure-hosted LLMs give companies a way to use cutting-edge AI within a framework of enterprise-grade security and compliance. You get the benefit of cloud scalability and advanced models, with options to keep everything locked down: data stays in Europe, only your organization can access it, nothing feeds back to the model provider, and you have logs for oversight. The trade-off is additional complexity and cost.

AI Providers and Data Privacy – A Checklist

Finally, here is a checklist you can use to evaluate LLM providers, particularly if you are considering a provider other than OpenAI.

1. Legal Role & Contracting

- Processor-only? The vendor confirms it will act purely as a data processor under GDPR, with your organisation remaining the controller. (Sources: CNIL; European Data Protection Board)

- EU representative / establishment. If the vendor is outside the EU, does it name an EU representative and rely on Standard Contractual Clauses (SCCs) or the EU-U.S. Data Privacy Framework for any transfers? (Source: European Data Protection Board)

- Clear sub-processor list. All downstream service providers are disclosed, with advance-notice and opt-out rights for any changes.

2. Data Use & Retention

- No training on your data by default (opt-in only). Check the policy; you should not need to opt out. (Source: Artificial Intelligence Act)

- Configurable retention window. 30-day or shorter logs are acceptable, with a zero-retention option for sensitive workloads.

- Secure deletion on request. Inputs, outputs and any fine-tuning artefacts can be wiped when you leave or sooner if required.

3. Data Residency & Transfers

- EU data-residency option. Provider can guarantee that inference and any stored data stay inside an EU geography you pick (no hidden replication).

- Transparent data-flow map. End-to-end diagram shows where data is routed and stored (incl. backups).
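
The checklist above can also be kept as a small, repeatable evaluation script. The following is a minimal Python sketch, assuming a hypothetical `VendorAnswers` record filled in by hand from a vendor questionnaire. The field names are invented for illustration, and the 30-day threshold simply mirrors the checklist; nothing here reflects a real vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorAnswers:
    """Hypothetical questionnaire answers for one LLM provider."""
    processor_only: bool
    outside_eu: bool
    eu_rep_and_transfer_mechanism: bool  # EU representative plus SCCs or DPF
    subprocessors_disclosed: bool
    trains_by_default: bool
    log_retention_days: int
    zero_retention_available: bool
    deletion_on_request: bool
    eu_residency_option: bool
    data_flow_map_provided: bool


def open_items(v: VendorAnswers) -> list[str]:
    """Return the checklist items the vendor does not yet satisfy."""
    checks = [
        (v.processor_only, "1. No processor-only confirmation"),
        # Only relevant when the vendor is established outside the EU.
        (not v.outside_eu or v.eu_rep_and_transfer_mechanism,
         "1. No EU representative or transfer mechanism"),
        (v.subprocessors_disclosed, "1. Sub-processor list not disclosed"),
        (not v.trains_by_default, "2. Trains on customer data by default"),
        (v.log_retention_days <= 30 and v.zero_retention_available,
         "2. Retention exceeds 30 days or no zero-retention option"),
        (v.deletion_on_request, "2. No secure deletion on request"),
        (v.eu_residency_option, "3. No EU data-residency option"),
        (v.data_flow_map_provided, "3. No transparent data-flow map"),
    ]
    return [message for ok, message in checks if not ok]
```

An empty result means every item in the three sections passed; otherwise each returned string names the section number and the gap to raise with the vendor.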

In your company, what LLMs (or any other AI tools) do you use? What are your experiences outside of OpenAI and Microsoft tools? Are there any that could be categorized as high-risk? Why not talk about it over a good cup of coffee?