Data Anonymization: Personal Data in Disguise

(updated: October 21, 2025)

GDPR: Strict Regulation, Heavy Fines

More than seven years ago, on May 25, 2018, the General Data Protection Regulation (GDPR) became applicable across the EU. Non-compliance with the GDPR can carry heavy fines: in 2023, a record €1.78 billion in fines was imposed in Europe. Most notably, the Irish Data Protection Commission (DPC) fined Meta €1.2 billion in May 2023.

The Netherlands reports the highest number of data breach notifications per 100,000 people (150.7). Notably, the Dutch Data Protection Authority (Autoriteit Persoonsgegevens) has even fined a government body, the Dutch Tax and Customs Administration (Belastingdienst), €3.7 million for unlawful processing of personal data.

In Germany, the record is held by H&M, with a €35.3 million fine imposed by the Hamburg Commissioner for Data Protection and Freedom of Information (Hamburgische Beauftragte für Datenschutz und Informationsfreiheit, HmbBfDI). In Lower Saxony, an online shop was fined €10.4 million in 2021.

Anonymization of Personal Data – Yet Another Challenge

According to the recommendations issued by local financial supervisory authorities or the central bank in most EU member states, only anonymized personal data should be used in development, testing and analysis environments. German law sets out a related principle: Section 27(3) of the Federal Data Protection Act (Bundesdatenschutzgesetz, BDSG) requires special categories of personal data processed for research or statistical purposes to be anonymized as soon as the purpose allows.

In addition, Recital 26 of the GDPR states: “The principles of data protection should (…) not apply to anonymous information, namely information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is not or no longer identifiable. This Regulation does not therefore concern the processing of such anonymous information, including for statistical or research purposes.”

Why Data Anonymization Is Becoming a Strategic Business Imperative

In a digital economy built on data, organizations are under growing pressure to strike a balance between data utility and privacy. Data anonymization, a process that transforms datasets so that individuals can no longer be identified, has emerged as a key tool for complying with the GDPR while still enabling valuable data-driven innovation. By anonymizing sensitive data, companies can mitigate legal, reputational, and financial risks without compromising their ability to derive insights from that data.

But anonymization is not just about risk reduction. It opens the door to safe data reuse across development, testing, and analytics environments. Properly anonymized data can be shared internally or with partners, used for machine learning model training, or repurposed for analysis without violating privacy regulations or customer trust. This capability can accelerate digital transformation while maintaining compliance, especially in sectors like finance and healthcare, where data is particularly sensitive.

Still, anonymization is not without its challenges. Organizations must navigate a complex trade-off between data utility and privacy guarantees. In practice, generic one-size-fits-all anonymization often degrades data more than necessary. A more strategic, use case–specific approach, where anonymization techniques are tailored to the actual purpose of data reuse, can preserve analytical value while upholding privacy standards. For business leaders, this means investing in processes and technologies that enable privacy-preserving data operations without stalling innovation.

How to Anonymize Personal Data

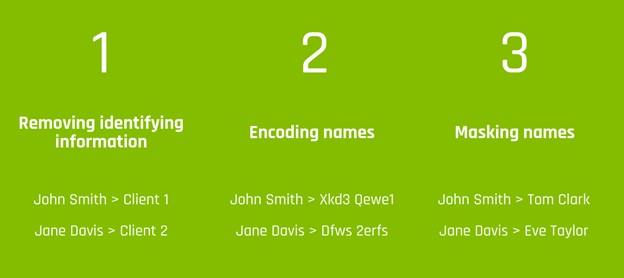

There are three principal ways to anonymize personal data:

- by removing the identifying information – so here, instead of John Smith and Jane Davis, we have Client 1 and Client 2

- by completely encoding the data – for example, John Smith is changed to Xkd213sd Qewew5354

- by masking, i.e. using randomly generated pseudonyms – in this case, meaningful pseudonyms are created that retain the characteristics of the original personal data, e.g. John Smith becomes Tom Clark and Jane Davis becomes Eve Taylor
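The three methods above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any product: the replacement name pools and the token length are hypothetical, and only a single name field is handled.

```python
import secrets
import string

# Sample data: the article's own example names.
clients = ["John Smith", "Jane Davis"]

def remove_identifiers(names):
    """Method 1: replace each name with a neutral label such as 'Client 1'."""
    return [f"Client {i}" for i, _ in enumerate(names, start=1)]

def encode(names, length=8):
    """Method 2: replace each word of a name with a random alphanumeric token."""
    alphabet = string.ascii_letters + string.digits

    def random_token():
        return "".join(secrets.choice(alphabet) for _ in range(length))

    return [" ".join(random_token() for _word in name.split()) for name in names]

# Hypothetical pools of realistic replacement names for method 3.
FIRST_NAMES = ["Tom", "Eve", "Anna", "Mark"]
LAST_NAMES = ["Clark", "Taylor", "Brown", "Wilson"]

def mask(names):
    """Method 3: replace each name with a randomly chosen, realistic pseudonym."""
    return [f"{secrets.choice(FIRST_NAMES)} {secrets.choice(LAST_NAMES)}" for _ in names]

print(remove_identifiers(clients))  # ['Client 1', 'Client 2']
print(encode(clients))              # random: two 8-character tokens per name
print(mask(clients))                # random picks from the name pools
```

Note that `mask` draws pseudonyms independently on every call, so the same person can receive different pseudonyms on different runs, and two people can end up with the same one. That is the consistency problem that matters in development and test environments.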

The basic objective of protecting personal data can be achieved with the first two methods. However, when a database containing personal data is used in a development or testing environment for routine development and testing work (where data protection must be ensured as well!), the anonymized data must remain meaningful and consistent. Meaningless codes or missing personal data obviously do not meet this requirement.
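One common way to keep masked data consistent is deterministic masking: the pseudonym is derived from a keyed hash (HMAC) of the original value, so the same input always yields the same realistic pseudonym, even in different tables. The sketch below assumes this technique; the key, the name pools and the table layouts are hypothetical, and it is not presented as how any particular tool works.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be stored securely, apart from the data.
SECRET_KEY = b"replace-with-a-secret-key"

# Hypothetical pools of realistic replacement names.
FIRST_NAMES = ["Tom", "Eve", "Anna", "Mark", "Lena", "Paul"]
LAST_NAMES = ["Clark", "Taylor", "Brown", "Wilson", "Moore", "Hall"]

def consistent_pseudonym(name: str, key: bytes = SECRET_KEY) -> str:
    """Map a real name to a realistic pseudonym; same name + same key -> same pseudonym."""
    digest = hmac.new(key, name.encode("utf-8"), hashlib.sha256).digest()
    # Use two different bytes of the digest to pick the first and the last name.
    first = FIRST_NAMES[digest[0] % len(FIRST_NAMES)]
    last = LAST_NAMES[digest[1] % len(LAST_NAMES)]
    return f"{first} {last}"

# Two hypothetical tables that refer to the same customer by name.
accounts = [{"owner": "John Smith", "account_id": "A-001"}]
transactions = [
    {"payer": "John Smith", "amount": 120.0},
    {"payer": "Jane Davis", "amount": 35.5},
]

masked_accounts = [{**row, "owner": consistent_pseudonym(row["owner"])} for row in accounts]
masked_transactions = [{**row, "payer": consistent_pseudonym(row["payer"])} for row in transactions]

# The same customer gets the same pseudonym in both tables, so the link between them survives.
print(masked_accounts[0]["owner"] == masked_transactions[0]["payer"])  # True
```

Two caveats apply to this sketch. With small name pools, different people can collide on the same pseudonym, so a real implementation would need larger pools or collision handling. And as long as the key exists, anyone holding it can recompute the mapping for known names, so the output is pseudonymized rather than anonymized data in the GDPR sense; key management therefore matters.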

Data Anonymization Made Easy, Across Multiple Systems

To maintain the protection of personal data in databases in development and test environments (while keeping the anonymized data meaningful), DSS Consulting has developed a comprehensive solution that is already in use at many of our clients, including financial institutions, insurance companies and telecom operators. Our solution, DSS Data Anonym:

- automatically detects and encrypts data in the systems involved

- supports anonymization across multiple systems, while consistently maintaining business context between data

- easily integrates with large enterprise systems

- provides full audit logging and standardized authentication management
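To give a rough idea of what automatic detection of personal data can involve, the sketch below flags table columns whose values mostly match simple patterns for e-mail addresses, IBANs and phone numbers. It is a deliberately naive, hypothetical example (the patterns, the 80% threshold and the sample rows are assumptions) and does not describe how DSS Data Anonym works internally.

```python
import re

# Hypothetical, deliberately simple patterns; real-world detection needs much more than regexes.
PATTERNS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "iban": re.compile(r"^[A-Z]{2}\d{2}[A-Z0-9]{11,30}$"),
    "phone": re.compile(r"^\+?\d[\d \-]{7,14}\d$"),
}

def detect_personal_columns(rows, threshold=0.8):
    """Flag columns where at least `threshold` of the non-empty values match one pattern."""
    findings = {}
    columns = {col for row in rows for col in row}
    for col in sorted(columns):
        values = [str(row[col]).strip() for row in rows if row.get(col) not in (None, "")]
        if not values:
            continue
        for label, pattern in PATTERNS.items():
            hits = sum(1 for v in values if pattern.match(v))
            if hits / len(values) >= threshold:
                findings[col] = label
                break
    return findings

# Hypothetical sample rows from a customer table.
rows = [
    {"id": 1, "contact": "john.smith@example.com", "account": "NL91ABNA0417164300", "segment": "retail"},
    {"id": 2, "contact": "jane.davis@example.org", "account": "DE89370400440532013000", "segment": "sme"},
]
print(detect_personal_columns(rows))  # {'account': 'iban', 'contact': 'email'}
```

Pattern matching alone misses names and free-text fields, and the IBAN pattern does not verify check digits, so this only illustrates the idea of scanning data for personal information before anonymizing it.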

Do you think that anonymization of personal data could be relevant for your company as well? Why not discuss this over a cup of coffee?